How to Implement OpenCV Computer Vision Algorithms in IoT Home Automation

Andrey Solovev

Chief Technology Officer, PhD in Physics and Mathematics

Anna Petrova

Writer With Expertise in Covering Electronics Design Topics

The latest advances in computer vision are automating more and more of our lives: its algorithms can run homes, drive cars, make diagnoses, and assist people in many other ways. OpenCV is a computer vision library that offers a rich selection of algorithms for object detection, face recognition, image restoration, and many other applications. Computer vision-based people detection is one of the library's features that is particularly popular in smart home projects. This article introduces OpenCV and explains how you can use it to detect people in an Internet of Things (IoT) home automation system.

OpenCV: Overview of the Computer Vision Library

Computer vision (CV) is a subset of artificial intelligence that deals with detecting, processing, and distinguishing objects in digital images and videos. Developers can build computer vision applications with a variety of solutions and technologies, including libraries, frameworks, and platforms. OpenCV is one of them: a library of more than 2,500 algorithms that range from classical machine learning (ML) methods, such as logistic regression, support-vector machines (SVMs), and decision trees, to deep learning and neural networks.

OpenCV is an open-source solution that can be freely used, modified, and distributed under the Apache license. The library was started at Intel in 1999, and the corporation has supported and contributed to OpenCV over the years. For example, the company added the Deep Neural Network (DNN) module to OpenCV 3.3. This module lets users train neural networks in deep learning frameworks and then run inference with those networks in OpenCV.

The library runs on a range of operating systems, including Windows, Linux, macOS, FreeBSD, Android, iOS, and BlackBerry 10, and provides interfaces for C++, Python, and Java. It offers strong cross-platform support and interoperability with other frameworks. For example, models trained in TensorFlow, Caffe, PyTorch (exported to ONNX), and other frameworks can usually be loaded and run in OpenCV with few adjustments.

OpenCV comprises a broad range of modules intended to process images, detect and track objects, describe features, and perform many other tasks. Here are some of the modules:

- core. Core functionality

- imgproc. Image Processing

- videoio. Video I/O

- video. Video Analysis

- objdetect. Object Detection

- ml. Machine Learning

- stitching. Images stitching

OpenCV also includes GPU-accelerated modules built on CUDA that provide the computational power to decode video, process images, and handle other operations in real time. These modules enable developers to build high-performance computer vision applications.

OpenCV has a large worldwide community. Tens of thousands of AI scientists, researchers, and engineers have contributed to the library and shared their expertise for more than 20 years.

You can also read our Guide to the OpenCV Library in Infographics.

OpenCV Applications

OpenCV is a versatile library used in a wide range of applications. For example, it helps robots and self-driving cars navigate and avoid obstacles with its object detection and tracking features. In manufacturing, computer vision is widely used for visual inspection, product grading, and quality control. Logistics applications can also use computer vision algorithms for efficient forecasting and planning, improved predictive maintenance, and route optimization. Let's look at some more OpenCV applications in various industries.

Healthcare

In healthcare, OpenCV-based solutions can identify diseases and potential health threats by using detection and classification algorithms to analyze images of patients' tissues and organs. Our team used the OpenCV library in the SkinView project, an iOS app for identifying melanoma skin cancer. The app can detect the edges of a mole, determine its color, shape, and other parameters, and assess whether the mole is likely to be malignant. The results are sent to a doctor. The team managed to reduce data processing time to less than 0.1 seconds and achieve a diagnostic accuracy of 80%.

Autonomous Vehicles

Using computer vision along with multiple sensors and cameras can help vehicles navigate either in the air (unmanned aerial vehicles) or in city traffic (self-driving cars). OpenCV offers plenty of algorithms to detect, classify, and track people and objects. These algorithms allow a vehicle to identify traffic signs, lane lines, pedestrians, and other cars, thus guiding it safely to its destination.

Augmented Reality

Computer vision integrated into mobile software can power augmented reality (AR) apps, enabling users to combine virtual and real-world objects. AR solutions based on OpenCV algorithms can visualize architecture and design projects, improve the learning process at schools and universities, and assist businesses in promoting their products and optimizing customer experience.

Facial Recognition

A facial recognition system detects, classifies, and recognizes people by their facial features. This technology is widely used in different applications, including photo and video editing apps and social media. OpenCV face detection and recognition algorithms can help authenticate smartphone users, verify identities in entry systems, and keep people safe in public gathering places.

OpenCV in IoT Home Automation

Smart homes are Internet of Things systems that help people run household functions. Networks of IoT devices can control lights, regulate indoor temperature, water plants, and turn on the TV. Computer vision is one of the technologies that make homes intelligent; for example, a smart fridge can use cameras to check food supplies. Developers can draw on the wide range of computer vision algorithms and tools in the OpenCV library to create smart home projects.

Security is an integral part of IoT home automation. For example, smart security solutions can help parents look after their children. Computer vision applications for people detection improve safety in many alarm and video intercom systems, and OpenCV face recognition can help prevent strangers from entering a house or apartment.

Besides protecting against intruders, computer vision can assist people with health issues, especially those who live alone and may not be able to take care of themselves. Such systems can monitor elderly people and people with disabilities. With the help of OpenCV algorithms and neural networks, they can detect emergencies and alert relatives or caregivers. Here, we'll share our experience building a remote monitoring system for real-time human detection with OpenCV.

Using OpenCV for Human Detection in Smart Homes

Within the Algodroid project, our main task was to create computer vision algorithms for detecting people. The customer intended to integrate the solution into an IoT system to recognize life-threatening situations and ensure the safety of elderly people who live alone. The system had to:

- detect a human;

- identify a posture;

- detect a fall;

- distinguish between a fall and sleep or rest;

- warn of the dangerous condition.

As a multi-feature cross-platform library with high processing speed, OpenCV was a suitable option to complete the above tasks.

Our project employed OpenCV human detection algorithms and neural networks to track and analyze people's activities throughout the day. We used datasets provided by the client and recorded our own videos to help the model differentiate between human postures. However, there was not enough data to train the model effectively and teach it to understand context. So we broke the task down into two steps.

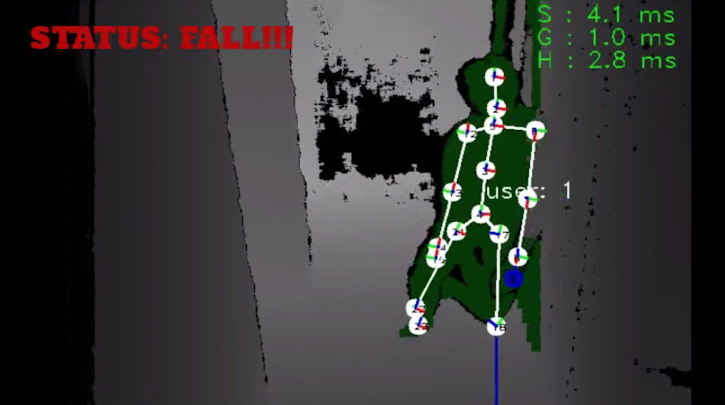

During the first phase, the team used OpenCV to implement computer vision for detecting people and visualizing skeletons. With the help of freely available data, we built and trained neural networks capable of detecting the human body. We used the TensorFlow-based BodyPix model to segment the human body. This open-source machine learning model divides a body into 24 parts and renders each part as a set of pixels of the same color.
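BodyPix's part-segmentation output can be thought of as a per-pixel map of part ids. As a minimal sketch, assuming a map in which each pixel holds an id from 0 to 23 for a body part or -1 for background (the convention described in the BodyPix documentation for part segmentation), the stdlib-only helpers below collect per-part pixel counts and bounding boxes and paint each part a single color. The function names and the palette are hypothetical, not part of BodyPix or OpenCV.

```python
from typing import Dict, List, Tuple

# Hypothetical input: BodyPix-style part map; 0..23 = body part id, -1 = background.
PartMap = List[List[int]]
BBox = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive
Color = Tuple[int, int, int]


def summarize_parts(part_map: PartMap) -> Dict[int, Tuple[int, BBox]]:
    """Return {part_id: (pixel_count, bounding_box)} for every part present."""
    stats: Dict[int, List[int]] = {}  # part -> [count, x_min, y_min, x_max, y_max]
    for y, row in enumerate(part_map):
        for x, part in enumerate(row):
            if part < 0:
                continue  # skip background pixels
            if part not in stats:
                stats[part] = [0, x, y, x, y]
            s = stats[part]
            s[0] += 1
            s[1], s[2] = min(s[1], x), min(s[2], y)
            s[3], s[4] = max(s[3], x), max(s[4], y)
    return {p: (s[0], (s[1], s[2], s[3], s[4])) for p, s in stats.items()}


def colorize(part_map: PartMap, palette: List[Color]) -> List[List[Color]]:
    """Paint every pixel of a part with that part's palette color; background is black."""
    return [[palette[p] if p >= 0 else (0, 0, 0) for p in row] for row in part_map]
```

Per-part bounding boxes like these are a cheap way to check which body parts are visible in a frame before computing any posture features.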

After that, the system determined the biomechanical data of the body: its geometry and movements. We used OpenCV motion tracking algorithms to calculate and classify these parameters.
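Geometry and movement features of this kind can be derived from a person mask. The sketch below is a stdlib-only illustration, not the project's code: it assumes a binary mask (1 = person) per frame, computes the centroid with the moment formula commonly applied to `cv2.moments()` output (m10 / m00, m01 / m00), uses the bounding-box aspect ratio as a simple upright-versus-lying cue, and turns successive centroid heights into vertical speeds. The helper names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 1 = person pixel, 0 = background


def body_geometry(mask: Mask) -> Optional[Tuple[float, float, float]]:
    """Return (cx, cy, aspect_ratio) for a binary person mask, or None if it is empty.

    cx and cy are the centroid from raw image moments (m10 / m00, m01 / m00);
    aspect_ratio is bounding-box width / height.
    """
    m00 = m10 = m01 = 0
    x_min = y_min = float("inf")
    x_max = y_max = float("-inf")
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1
                m10 += x
                m01 += y
                x_min, x_max = min(x_min, x), max(x_max, x)
                y_min, y_max = min(y_min, y), max(y_max, y)
    if m00 == 0:
        return None
    width = x_max - x_min + 1
    height = y_max - y_min + 1
    return m10 / m00, m01 / m00, width / height


def vertical_speeds(centroid_ys: Sequence[float], fps: float) -> List[float]:
    """Frame-to-frame vertical centroid speed in pixels/s (image y grows downward)."""
    return [(b - a) * fps for a, b in zip(centroid_ys, centroid_ys[1:])]
```

As a rough heuristic, an aspect ratio well below 1 suggests an upright body and one well above 1 suggests a lying body, while a large positive vertical speed indicates the centroid moving quickly toward the floor.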

To estimate the posture of a person, we turned to synthetic data generation. Using simulation libraries, we created a physical model of a human body based on real proportions and biometric and biomechanical data. We placed the model in a virtual environment and generated probable scenarios of human actions. For example, a person could walk some distance, then stagger and fall. In total, there were about a hundred scenarios, and the algorithms learned to estimate posture from them.
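The article does not name the simulator or its parameters, so the following is only a toy stand-in for that workflow: each hypothetical scenario produces a labeled, noisy trajectory of center-of-mass height, and body proportions vary across samples. A real pipeline would export full-body poses or rendered frames from a physics simulator instead; every number below is an illustrative assumption.

```python
import random
from typing import List, Tuple

# Toy stand-in for a physics simulator: each scenario yields a time series of the
# body's center-of-mass height in meters. All numbers are illustrative assumptions.
SCENARIOS = ("walk", "sit", "fall")


def simulate(scenario: str, frames: int = 30, com_height: float = 1.0, seed: int = 0) -> List[float]:
    """Return a noisy center-of-mass height trajectory for one scenario."""
    if scenario not in SCENARIOS:
        raise ValueError(f"unknown scenario: {scenario}")
    rng = random.Random(seed)  # seeded so each sample is reproducible
    series: List[float] = []
    for t in range(frames):
        phase = t / max(1, frames - 1)  # runs from 0.0 to 1.0 over the clip
        if scenario == "walk":
            h = com_height
        elif scenario == "sit":
            # Slow, partial drop spread over the first half of the clip.
            h = com_height * (1.0 - 0.45 * min(1.0, phase * 2))
        else:  # "fall": upright until mid-clip, then a fast drop toward the floor
            drop = min(1.0, max(0.0, (phase - 0.5) / 0.1))
            h = com_height * (1.0 - 0.85 * drop)
        series.append(h + rng.gauss(0.0, 0.01))  # small sensor-like jitter
    return series


def make_dataset(n_per_class: int = 10) -> List[Tuple[List[float], str]]:
    """Generate labeled trajectories, varying body proportions and the noise seed."""
    data: List[Tuple[List[float], str]] = []
    for label in SCENARIOS:
        for i in range(n_per_class):
            com_height = 0.8 + 0.2 * i / max(1, n_per_class - 1)  # 0.8 to 1.0 m
            data.append((simulate(label, com_height=com_height, seed=i), label))
    return data
```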

For the second step of the task, we built decision trees to match the estimated posture with the target state. The algorithms compared the posture with the patterns from the simulated scenarios and predicted falls.
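The project's actual trees are not described in detail. As an illustration, a hand-written decision tree over hypothetical features (torso angle, downward speed of the center of mass, whether the person is on a bed or sofa, and time spent motionless) could separate a fall from sleep or rest. Every feature name and threshold below is an assumption; in practice the splits would be learned from data, for example with OpenCV's `cv2.ml` decision-tree classes.

```python
from dataclasses import dataclass


@dataclass
class PostureFeatures:
    torso_angle_deg: float  # 0 = upright, 90 = horizontal
    drop_speed_mps: float   # peak downward speed of the center of mass
    on_bed_or_sofa: bool    # person region overlaps a known resting zone
    still_seconds: float    # time without significant motion


def classify_state(f: PostureFeatures) -> str:
    """Hand-built decision tree; every threshold here is an illustrative assumption."""
    if f.torso_angle_deg < 30:
        return "upright"
    if f.torso_angle_deg < 60:
        return "sitting_or_leaning"
    # The body is roughly horizontal: was it a controlled lie-down or a fall?
    if f.on_bed_or_sofa and f.drop_speed_mps < 1.0:
        return "resting"
    if f.drop_speed_mps >= 1.0:
        return "fall"
    return "lying_on_floor"


def should_alert(state: str, f: PostureFeatures, max_still_s: float = 30.0) -> bool:
    """Alert on a fall, or on lying outside a resting zone without moving for too long."""
    return state == "fall" or (state == "lying_on_floor" and f.still_seconds > max_still_s)
```

Keeping the tree shallow makes each decision easy to trace, which matters when a caregiver asks why an alert fired.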

We developed a communication system that collected data from all the cameras installed in the house. After identifying a fall, it could send the image and notify an emergency medical service for further assistance. We were also planning to design a custom Android tablet that elderly users could use to contact their relatives and get online medical consultations.
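When several cameras watch the same room, each may report the same fall. One hypothetical way to merge their reports, not described in the article, is to raise a single alert once any camera's fall score crosses a threshold and then suppress repeats for a cooldown period. The class name, threshold, cooldown, and payload fields are illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FallAlertAggregator:
    """Merge fall detections from several cameras into one alert per incident.

    Hypothetical design: thresholds and the alert payload are illustrative.
    """
    threshold: float = 0.8     # per-camera fall confidence that counts as a detection
    cooldown_s: float = 120.0  # ignore further detections of the same incident
    _last_alert_time: Optional[float] = field(default=None, init=False)

    def update(self, camera_id: str, fall_score: float, timestamp: float) -> Optional[dict]:
        """Feed one camera's latest fall score; return an alert payload or None."""
        if fall_score < self.threshold:
            return None
        if self._last_alert_time is not None and timestamp - self._last_alert_time < self.cooldown_s:
            return None  # this incident has already been reported
        self._last_alert_time = timestamp
        # The caller would attach this camera's frame and forward the alert.
        return {"camera": camera_id, "score": fall_score, "time": timestamp}
```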

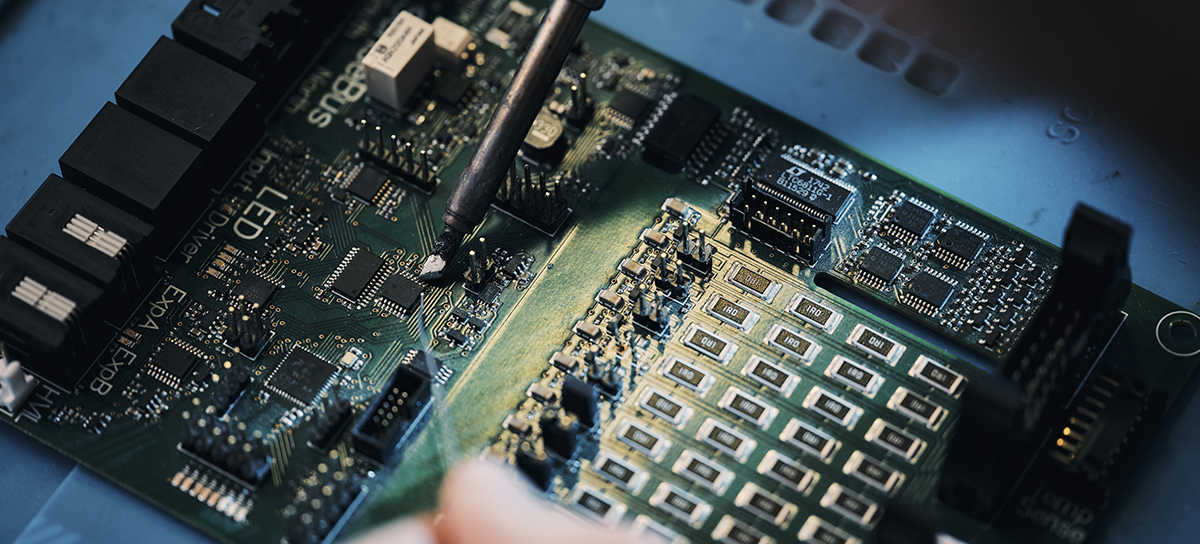

While working on this project, we tried different types of cameras—from simple RGB cameras to Orbbec Astra and Intel RealSense depth cameras. They tracked people during the day and sent images to a single-board computer (SBC) for processing. We used NVIDIA Jetson Xavier NX as the SBC.

Intel RealSense provided high-quality RGB-D images that simplified the work of computer vision algorithms. Depth cameras used infrared light and could detect events even in the dark, but they were too expensive and required more time to process the images. The cheapest solution was an RGB camera. Combined with BodyPix, it showed the same results as depth cameras but saved time on image processing.

The biggest advantage of using the OpenCV library for this project was the opportunity to combine different methods and approaches within one platform. Our fall detection system comprised both classical ML algorithms and deep learning. As a result, the system could detect 10 target states out of 10 activities. The DNN module allowed us to train neural networks in other frameworks and then run them in OpenCV.

To protect personal data, minimize response time, and ensure the stability of our IoT network, we implemented edge computing. That way, we could process data and run algorithms directly on the SBC offline.

Challenges of Implementing Human Detection with OpenCV

The biggest challenge of any project related to real-time human detection in computer vision is reducing false positives and achieving high detection accuracy. We faced this challenge too.

Initially, we used simple OpenCV machine learning algorithms to distinguish whether a person was walking, lying down, or falling. Implementing Hidden Markov Models (HMMs) helped us predict a fall based on a person's previous behavior. We detected body contours, calculated the body's center of mass, and analyzed how it deviated from frame to frame. The larger the deviations, the higher the probability of a fall.
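A minimal sketch of this idea, assuming three hidden states (walking, lying down, falling) and observations that are frame-to-frame center-of-mass shifts binned into small, medium, and large. The article does not give the model's states or probabilities, so all names and numbers here are made up. The forward algorithm (HMM filtering) updates the probability of each state after every frame.

```python
import math
from typing import Dict, List, Sequence, Tuple

STATES = ("walk", "lie_down", "fall")

# Illustrative parameters only; a real model would estimate them from data.
START = {"walk": 0.8, "lie_down": 0.15, "fall": 0.05}
TRANS = {  # P(next state | current state)
    "walk": {"walk": 0.9, "lie_down": 0.07, "fall": 0.03},
    "lie_down": {"walk": 0.1, "lie_down": 0.85, "fall": 0.05},
    "fall": {"walk": 0.05, "lie_down": 0.15, "fall": 0.8},
}
EMIT = {  # P(observed deviation level | state)
    "walk": {"small": 0.7, "medium": 0.25, "large": 0.05},
    "lie_down": {"small": 0.3, "medium": 0.6, "large": 0.1},
    "fall": {"small": 0.05, "medium": 0.25, "large": 0.7},
}


def deviation_levels(centroids: Sequence[Tuple[float, float]],
                     medium_px: float = 5.0, large_px: float = 20.0) -> List[str]:
    """Bin frame-to-frame center-of-mass shifts (in pixels) into discrete observations."""
    levels = []
    for (x0, y0), (x1, y1) in zip(centroids, centroids[1:]):
        d = math.hypot(x1 - x0, y1 - y0)
        levels.append("large" if d >= large_px else "medium" if d >= medium_px else "small")
    return levels


def forward_filter(observations: Sequence[str]) -> List[Dict[str, float]]:
    """HMM forward algorithm: P(state at frame t | observations up to frame t)."""
    beliefs: List[Dict[str, float]] = []
    prev = None
    for obs in observations:
        if prev is None:
            belief = {s: START[s] * EMIT[s][obs] for s in STATES}
        else:
            belief = {s: EMIT[s][obs] * sum(prev[r] * TRANS[r][s] for r in STATES)
                      for s in STATES}
        total = sum(belief.values())
        belief = {s: p / total for s, p in belief.items()}  # normalize to avoid underflow
        beliefs.append(belief)
        prev = belief
    return beliefs
```

With these parameters, two small shifts followed by two large ones move most of the probability mass to `fall`. A person sitting down quickly also produces large shifts, which is the weakness of a purely center-of-mass-based model.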

At first, this approach seemed to work, but it ultimately proved ineffective: the system detected a fall when a person was sitting down or just leaning forward. In addition, contour detection depended on the lighting in the room, so we could not detect people in poor lighting. As a consequence, the number of false positives was too high, and we had to abandon this approach despite its low cost and simplicity.
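Every sit-down or forward lean flagged as a fall counts as a false positive. The helper below shows the standard way such errors are measured from a confusion matrix; the function name is hypothetical, and the counts in the usage note are invented for illustration.

```python
def detection_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Precision, recall, and false-positive rate from confusion-matrix counts.

    tp: falls correctly flagged    fp: non-falls (e.g. sitting down) flagged as falls
    fn: falls that were missed     tn: non-falls correctly ignored
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    false_positive_rate = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "false_positive_rate": false_positive_rate}
```

For example, if 8 real falls are all detected but 12 sit-downs are also flagged, recall is 1.0 while precision is only 8 / 20 = 0.4, so most alerts sent to caregivers would be false alarms.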

To achieve better accuracy in human body detection, we decided to build neural networks based on biometric parameters and biomechanical data. To train the models, we generated synthetic datasets with the help of simulation services. Unity, Gazebo, and other simulator platforms provide test environments that simplify and speed up the training process.

Implementing people detection using computer vision can get more complicated in indoor spaces, such as homes. A person can be hidden behind a piece of furniture, so the camera will not capture the entire body and the system will not get complete biometric data. After trying different approaches, we combined neural networks with decision trees, classical machine learning algorithms available in OpenCV's ml module. These algorithms have significant advantages: they are fast and easy to interpret, and they can learn from small amounts of data or when some data is missing.
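When furniture hides part of the body, features that depend on the hidden parts cannot be computed. A minimal sketch, assuming hypothetical keypoint names and thresholds: the torso angle is the primary split, and when a shoulder or hip keypoint is missing the check falls back to the bounding-box aspect ratio. This imitates the idea of a surrogate split in decision trees (OpenCV's `DTrees` has a `setUseSurrogates` option for missing data), but it is a hand-written rule, not OpenCV's implementation.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels, y pointing down
Keypoints = Dict[str, Optional[Point]]  # None = keypoint occluded or not detected

TORSO_POINTS = ("left_shoulder", "right_shoulder", "left_hip", "right_hip")


def torso_angle_deg(kp: Keypoints) -> Optional[float]:
    """Angle of the shoulder-to-hip line from vertical, or None if any point is missing."""
    if any(kp.get(name) is None for name in TORSO_POINTS):
        return None
    sx = (kp["left_shoulder"][0] + kp["right_shoulder"][0]) / 2
    sy = (kp["left_shoulder"][1] + kp["right_shoulder"][1]) / 2
    hx = (kp["left_hip"][0] + kp["right_hip"][0]) / 2
    hy = (kp["left_hip"][1] + kp["right_hip"][1]) / 2
    # 0 degrees when hips are straight below shoulders, 90 when side by side.
    return math.degrees(math.atan2(abs(hx - sx), abs(hy - sy)))


def is_horizontal(kp: Keypoints, bbox_aspect: float) -> bool:
    """Primary split on torso angle; surrogate split on bbox width/height if occluded."""
    angle = torso_angle_deg(kp)
    if angle is not None:
        return angle >= 60.0
    return bbox_aspect >= 1.5
```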

Conclusion

Smart home IoT networks make people's lives easier in many respects. They automate daily routines, manage energy consumption, and ensure the safety of the household. Modern smart homes often rely on machine learning and artificial intelligence technologies. For example, remote monitoring systems can be based on computer vision algorithms for people detection.

A remote monitoring system integrated into a home automation solution can look after elderly and disabled people who live independently. Cameras track people's activities, and algorithms analyze their behavior to identify emergencies.

OpenCV is a popular choice for IoT home automation projects with computer vision. It is an open-source ecosystem of tools, algorithms, and neural networks that perform many functions. OpenCV is one of the libraries we use for building CV applications. For example, OpenCV motion detection algorithms can identify and track people in real time. If you want to create a smart solution using the latest advances in computer vision or AI, drop us a line and share your thoughts.
