
Algodroid R&D Project: Computer Vision System for the Elderly

When people grow old, falls can be extremely dangerous. They are often the result of a sudden medical condition, such as stroke, seizure, or heart attack. The problem is especially acute for people who live alone: once they fall, a significant amount of time can pass before they receive assistance.

To reduce the risk of falls, numerous companies are working on technology solutions for fall prevention. These solutions include wearable devices such as medical bracelets, as well as reaction-based alarms, virtual sitters, video monitoring systems, and even smart shoes.

Request

One of our clients, a Belgium-based startup called Algodroid, is working on technology that uses cameras to detect falls in the homes of the elderly. They turned to us to help implement their solution.

The problem Algodroid intended to solve wasn't a trivial one. To build a fall detection system, we needed to implement a set of intelligent algorithms that would be able to:

- Recognize a human posture (sitting, walking, lying down, falling)

- Detect a human in a frame

- Detect the occurrence of a fall

- Differentiate between an incident of falling and the process of lying down

Solution

Because of the complexity of the project, we offered Algodroid our Research & Development collaboration model that entails scientific research and project feasibility evaluation. We did thorough research in the area of computer vision and machine learning and are currently working on the implementation of artificial vision algorithms that enable video data collection and analysis.

Result

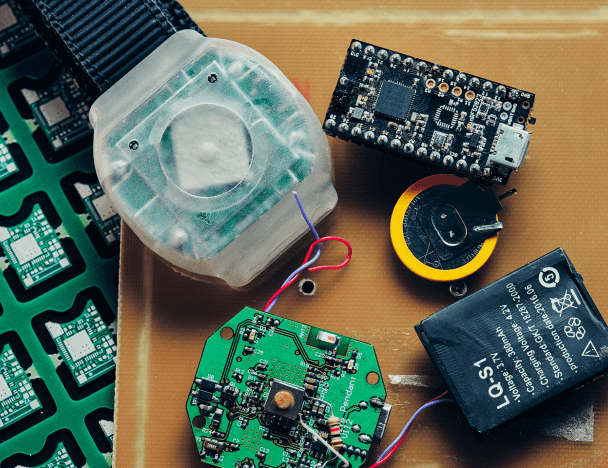

The video monitoring system for fall prevention that we at Integra Sources are currently working on consists of four parts:

- 3D cameras to track older people's activities throughout the day

- A single-board computer to process data from the cameras

- Artificial vision algorithms to recognize human postures and detect if a person has fallen

- A communication system that sends an alarm message, along with a picture, to caregivers once a fall is detected

Scope of work

- Selected a 3D camera with depth sensing so that a person's state can be identified even in the dark.

- Developed computer vision algorithms in C/C++ using OpenCV. These algorithms can identify the person's state and distinguish falling from lying down.

- Built a communication system that gathers information from all the cameras installed in the house.

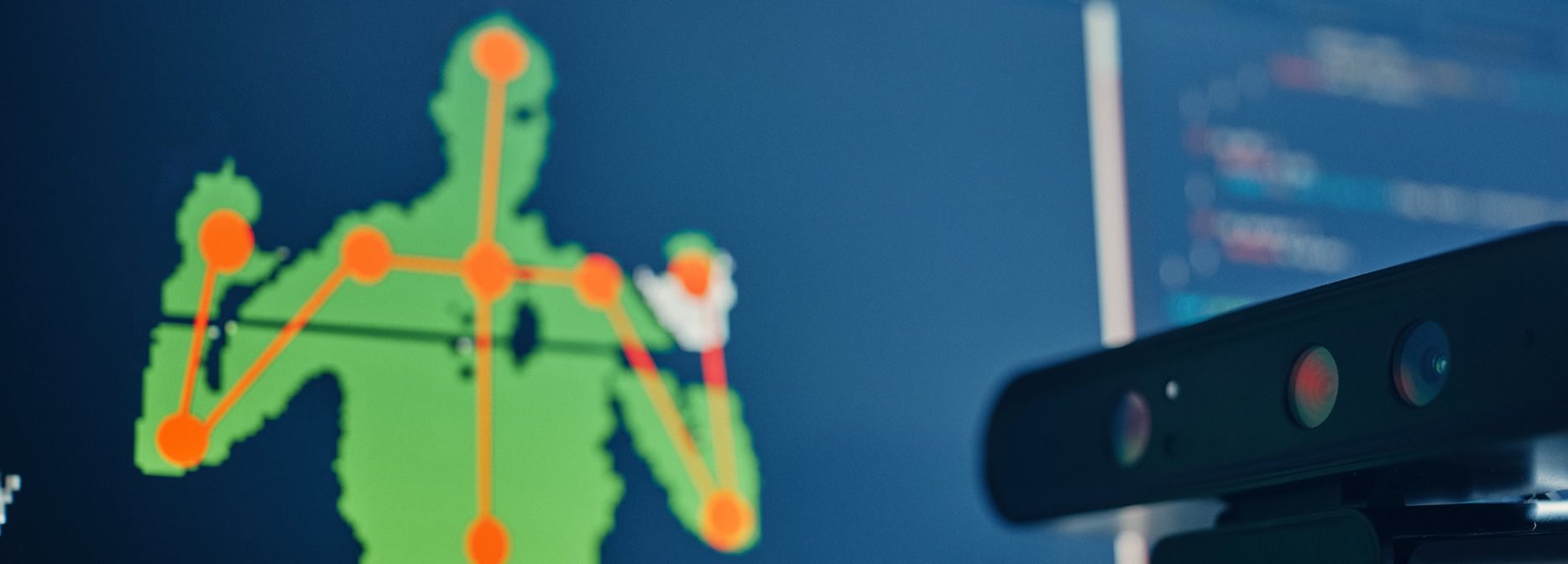

3D camera

For the solution to work properly, cameras need to be installed in several rooms to cover the whole area where an elderly person lives. But what sort of camera could we use to obtain real-time information through video analysis?

At first, we decided to use an open-source machine vision camera. It could run machine vision algorithms for object detection and tracking, scene recognition, and object recognition using deep neural networks. But it also had a number of disadvantages:

- An insufficient frame rate at low resolutions

- A weak processor that didn’t allow us to run complex image processing algorithms

- UART as the only communication interface

- A very noisy cooler

Using machine vision algorithms on ordinary video for this project also entailed certain limitations. For example, we can’t detect events if the room is too dark. In addition, it’s difficult to distinguish a person from the background if the person is wearing clothes of a similar color.

We needed a better solution. The camera we were looking for had to be able to “see” the room in 3D regardless of the lighting conditions. Examples of such cameras include the Orbbec 3D and Intel RealSense. Both carry out active depth sensing using infrared light, so they work in the dark.

A 3D camera represents video as depth maps: images in which each pixel stores the distance from the viewpoint to the surface of an object in the scene. The camera sends this data to the single-board computer, which runs intelligent algorithms to extract a person from the image and identify his or her pose.
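
As a rough illustration of what a depth map holds, the sketch below assumes a frame stored as one 16-bit distance per pixel in millimetres, with 0 meaning "no reading". That layout is common for depth cameras of this class, but it is an assumption here, and `DepthFrame` and `closestSurfaceMm` are hypothetical names rather than part of any camera SDK.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical depth frame: one distance per pixel, in millimetres.
// A value of 0 is assumed to mean "no depth reading for this pixel".
struct DepthFrame {
    std::size_t width;
    std::size_t height;
    std::vector<std::uint16_t> mm;  // row-major, width * height entries

    std::uint16_t at(std::size_t x, std::size_t y) const {
        assert(x < width && y < height);
        return mm[y * width + x];
    }
};

// Returns the distance to the closest measured surface,
// or 0 if the frame contains no valid readings.
std::uint16_t closestSurfaceMm(const DepthFrame& frame) {
    std::uint16_t closest = 0;
    for (std::uint16_t d : frame.mm) {
        if (d == 0) continue;  // skip pixels without a reading
        if (closest == 0 || d < closest) closest = d;
    }
    return closest;
}
```

Because each pixel is a distance rather than a colour, the same frame looks identical in daylight and in darkness, which is what makes this representation attractive for the use case described above.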

We chose the Orbbec 3D for this purpose, but we’re also considering building a custom camera that encodes the depth of the scene and streams data over Wi-Fi instead of USB cables.

Artificial vision algorithms

Our system uses a number of complex computer vision algorithms, the main purpose of which is to distinguish subjects in a fallen state. We built these algorithms in C/C++ using OpenCV. The algorithms implement the following tasks:

- Finding a human contour in the sensor data. Because the processing power of the single board computer installed at home is limited, we needed to avoid using resource-intensive machine learning methods. That's why we implemented this algorithm using a background subtraction method which compares frames and finds the differences between them.

- Identifying a person's state (sitting, walking, lying, falling) and distinguishing falling from lying down. The implementation is based on a hidden Markov model (HMM), which estimates the probability of a fall from the previous states of the body and the transitions between them. In effect, the model decides which type of event (a fall or a normal state) the current position of the body should be attributed to.

- Tracking in real time the center of body mass, walking speed, and footprint parameters to get the maximum possible information about the current event.

- Filtering sensor data to reduce the impact of environmental conditions on image recognition quality.

- Implementing background subtraction algorithms and postural sway analysis.

- Counting the number of people in a room. For this, we used machine learning techniques: a histogram of oriented gradients (HOG) descriptor combined with a support vector machine (SVM) classifier. We also worked on "regions of interest", or special areas in the room. This helped us understand the everyday activity of a person and trigger specific events if some action in a "region of interest" takes more time than usual. For example, a sofa is an area we needed to pay more attention to than a closet.
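
The contour-finding and tracking tasks in the list above can be illustrated with a simplified sketch. It is not the project's OpenCV code: it assumes depth frames stored as row-major arrays of millimetre distances (0 meaning no reading), a background frame captured while the room is empty, and a fixed difference threshold. The names `foregroundMask` and `silhouetteCentroid` are hypothetical, and the centroid of the silhouette in image coordinates is used only as a stand-in for the center of body mass.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Row-major depth image in millimetres; 0 is assumed to mean "no reading".
using DepthImage = std::vector<std::uint16_t>;

struct Centroid {
    double x;            // column of the silhouette centre, in pixels
    double y;            // row of the silhouette centre, in pixels
    std::size_t pixels;  // number of foreground pixels (0 = nothing found)
};

// Background subtraction: a pixel is foreground when its depth differs
// from the empty-room background by more than thresholdMm.
std::vector<bool> foregroundMask(const DepthImage& background,
                                 const DepthImage& frame,
                                 int thresholdMm) {
    assert(background.size() == frame.size());
    std::vector<bool> mask(frame.size(), false);
    for (std::size_t i = 0; i < frame.size(); ++i) {
        if (background[i] == 0 || frame[i] == 0) continue;  // invalid reading
        const int diff = static_cast<int>(frame[i]) - static_cast<int>(background[i]);
        mask[i] = std::abs(diff) > thresholdMm;
    }
    return mask;
}

// Mean position of all foreground pixels in image coordinates.
Centroid silhouetteCentroid(const std::vector<bool>& mask, std::size_t width) {
    assert(width > 0);
    double sumX = 0.0, sumY = 0.0;
    std::size_t count = 0;
    for (std::size_t i = 0; i < mask.size(); ++i) {
        if (!mask[i]) continue;
        sumX += static_cast<double>(i % width);
        sumY += static_cast<double>(i / width);
        ++count;
    }
    if (count == 0) return Centroid{0.0, 0.0, 0};
    return Centroid{sumX / count, sumY / count, count};
}
```

Comparing the centroid between consecutive frames gives a crude estimate of how fast the silhouette is moving and in which direction, which is the kind of signal a posture classifier can consume. A real deployment would also need the filtering step mentioned above, since raw depth data is noisy at object edges.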

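To show how a hidden Markov model can separate a fall from lying down, here is a minimal sketch of one forward-algorithm step over four posture states. Everything specific in it is an assumption made for illustration: the observation symbols (a coarse body-height and speed category per frame), the names `PostureHmm`, `forwardStep`, and `kExampleHmm`, and all of the probabilities, which are invented rather than taken from the project.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

enum Posture : std::size_t { Walking, Sitting, Lying, Falling };
constexpr std::size_t kStates = 4;

// Hypothetical per-frame observation derived from the tracked silhouette.
enum Observation : std::size_t { Upright, Lowered, OnFloor, DroppingFast };
constexpr std::size_t kObservations = 4;

using Belief = std::array<double, kStates>;  // probability of each posture

struct PostureHmm {
    double transition[kStates][kStates];     // transition[from][to]
    double emission[kStates][kObservations]; // emission[state][observation]
};

// Made-up numbers for illustration only; each row sums to 1.
const PostureHmm kExampleHmm = {
    {{0.90, 0.08, 0.00, 0.02},   // from Walking
     {0.10, 0.80, 0.09, 0.01},   // from Sitting
     {0.00, 0.10, 0.90, 0.00},   // from Lying
     {0.00, 0.00, 0.80, 0.20}},  // from Falling
    {{0.90, 0.08, 0.01, 0.01},   // Walking emits mostly Upright
     {0.05, 0.90, 0.04, 0.01},   // Sitting emits mostly Lowered
     {0.01, 0.09, 0.89, 0.01},   // Lying emits mostly OnFloor
     {0.02, 0.08, 0.10, 0.80}}   // Falling emits mostly DroppingFast
};

// One forward step: predict with the transition matrix, weight each state
// by how well it explains the observation, then normalise.
Belief forwardStep(const PostureHmm& hmm, const Belief& prior, Observation obs) {
    assert(obs < kObservations);
    Belief next{};
    double total = 0.0;
    for (std::size_t to = 0; to < kStates; ++to) {
        double predicted = 0.0;
        for (std::size_t from = 0; from < kStates; ++from) {
            predicted += prior[from] * hmm.transition[from][to];
        }
        next[to] = predicted * hmm.emission[to][obs];
        total += next[to];
    }
    if (total > 0.0) {
        for (double& p : next) p /= total;
    }
    return next;
}
```

With these invented numbers, a single `DroppingFast` observation from a walking state moves most of the belief to `Falling`, while a `Lowered`, `Lowered`, `OnFloor`, `OnFloor` sequence ends in `Lying` with the `Falling` belief staying small. The path taken through the states, not the final position on the floor, is what distinguishes the two events.
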
Communication system

Once a fall is detected, our system needs to let the caregivers know about it. We're working on a dedicated computational unit that gathers information from all the cameras installed in the house and, when a fall occurs, sends a signal to the call center.

Once the connection with the call center is established, the operator asks the person who fell how they feel and can send medical assistance to their house. The operator can also call the person's relatives to let them know about the incident. We're planning to implement a special device, such as an Android tablet in the form of a photo frame. It will be fully customized to our needs and will serve as a user interface through which older people can communicate with their relatives, connect with doctors, and get useful content like exercise videos.

Technologies Used

- C++ with the OpenCV library was used to prototype the algorithms and build a proof of concept (PoC).

- We used the JeVois smart machine vision camera as a compact and cheap solution that could be integrated into an MVP.

- Depth cameras, such as the Intel RealSense and Orbbec Astra, were used to estimate the distance to the object under observation.

- We used the Nuitrack library to work with the depth channel.

- Body-tracking algorithms were used to obtain information about a person's silhouette.

- A Banana Pi Media Board Computer was used as an RTSP server to stream frames received from the Astra camera.

- The RTSP server was implemented in C++ with the live555 library.

- The Qt framework was used to develop a cross-platform GUI application for interacting with the Banana Pi board.

INTEGRA maintains a patient and helpful approach, and there have been no communication issues amidst time differences. They’ve provided regular and comprehensive progress updates to ensure accuracy, as well as constant resource availability, throughout the ongoing project.
